Expert Answer

Answer the following questions

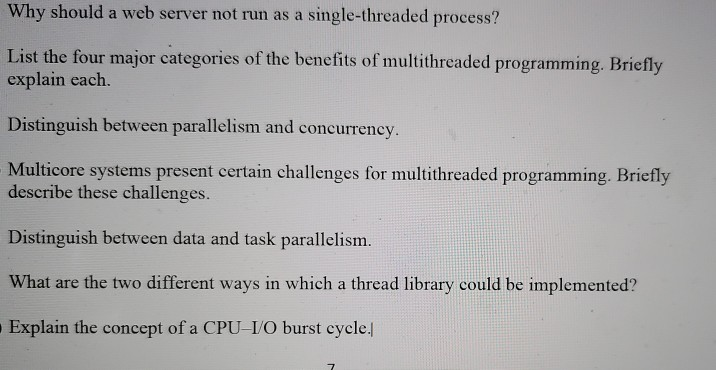

(1) If the web server runs as a single-threaded process, it can service only one client at a time, and other clients may wait a very long time. Before threads were common, one fix was to have the server accept requests and create a separate process to service each one. Process creation is time-consuming and resource-intensive, however, and if the new process will perform the same tasks as the existing process, why incur all that overhead?

It is generally more efficient to use one process that contains multiple threads. If the web-server process is multithreaded, the server creates a separate thread that listens for client requests. When a request arrives, rather than creating another process, the server creates a new thread to service the request and resumes listening for additional requests.
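The thread-per-request pattern described above can be sketched as follows. This is a minimal illustration, not a real server: a `queue.Queue` stands in for the listening socket, and `handle_request` and `serve` are hypothetical names. The listener loop hands each request to a new thread and immediately goes back to waiting for the next one.

```python
import queue
import threading

def handle_request(request, replies, lock):
    """Service one client request; here the 'work' is just upper-casing it."""
    reply = request.upper()   # stands in for real work, e.g. reading a file
    with lock:                # 'replies' is shared by all worker threads
        replies.append(reply)

def serve(incoming, replies):
    """Listener loop: spawn one thread per request, then resume listening."""
    lock = threading.Lock()
    workers = []
    while True:
        request = incoming.get()    # block until the next request arrives
        if request is None:         # sentinel value: shut the server down
            break
        worker = threading.Thread(target=handle_request,
                                  args=(request, replies, lock))
        worker.start()              # service the request in a new thread...
        workers.append(worker)      # ...while this thread keeps listening
    for worker in workers:          # wait for in-flight requests to finish
        worker.join()

# Usage: three simulated client requests followed by the shutdown sentinel.
incoming = queue.Queue()
for req in ["get /index.html", "get /logo.png", "get /about.html"]:
    incoming.put(req)
incoming.put(None)

replies = []
serve(incoming, replies)
print(sorted(replies))
```

The replies are sorted before printing because the worker threads may finish in any order.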

(2) The benefits of multithreaded programming can be broken down into four major categories:

(i) Responsiveness :-- Multithreading an interactive application may allow a program to continue running even if part of it is blocked or is performing a lengthy operation, thereby increasing responsiveness to the user. For instance, a multithreaded web browser could allow user interaction in one thread while an image is being loaded in another thread.

(ii) Resource sharing :-- Processes may only share resources through techniques such as shared memory or message passing. Such techniques must be explicitly arranged by the programmer. However, threads share the memory and the resources of the process to which they belong by default. The benefit of sharing code and data is that it allows an application to have several different threads of activity within the same address space.

(iii) Economy :-- Allocating memory and resources for process creation is costly. Because threads share the resources of the process to which they belong, it is more economical to create and context-switch threads. Empirically gauging the difference in overhead can be difficult, but in general it is much more time-consuming to create and manage processes than threads. In Solaris, for example, creating a process is about thirty times slower than creating a thread, and context switching is about five times slower.

(iv) Scalability :-- The benefits of multithreading can be even greater in a multiprocessor architecture, where threads may run in parallel on different processors. A single-threaded process can run on only one processor, regardless of how many are available. Multithreading on a multi-CPU machine increases parallelism.
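The resource-sharing point (ii) can be illustrated with a short sketch: several threads write into one ordinary dictionary without any shared-memory segment or message queue being set up, because they all live in the same address space. The file names, texts, and the `count_words` helper are hypothetical.

```python
import threading

# Ordinary module-level data: no shared-memory segment or message queue is set up.
totals = {}

def count_words(name, text):
    # Every thread sees the same 'totals' dict, because all threads of a
    # process share its address space. Each thread writes a different key.
    totals[name] = len(text.split())

docs = {"a.txt": "one two three", "b.txt": "four five", "c.txt": "six"}
threads = [threading.Thread(target=count_words, args=(name, text))
           for name, text in docs.items()]
for t in threads:
    t.start()
for t in threads:
    t.join()          # after join(), every thread's write is visible here
print(sorted(totals.items()))   # [('a.txt', 3), ('b.txt', 2), ('c.txt', 1)]
```

Separate processes would each get their own copy of `totals` and would need shared memory or message passing to combine results.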

(3) In computer science, parallelism and concurrency are two distinct concepts.

Concurrency :-- Concurrency means multitasking: you have several tasks to perform, and the way you interleave them matters. For example, suppose you have two tasks. Done strictly one after the other, they take a long time, because the first task includes idle periods (for example, standing in a queue). If you work on task 2 during those idle periods, both tasks finish sooner. Think of a single processor executing processes in a time-sharing environment. Concurrency is achieved by interleaving the execution of processes on the central processing unit (CPU), that is, by context switching; that is why it looks like parallel processing. It improves (shortens) the response time of processes and increases the amount of work completed in a given time.

Parallelism :-- Parallelism means the tasks really run at the same time, independently of each other. The situation is the same as before: we have several tasks to perform, but this time we perform all of them simultaneously. Suppose we have an assistant: while we perform task 1, the assistant performs task 2. Here you can picture multiple processors executing the tasks independently. Parallelism relates to applications in which tasks are divided into smaller subtasks that are processed simultaneously. It is used to increase the throughput and computational speed of a system by using multiple processors.

On a single processor, concurrency is an illusion of parallelism. A real-life example: while a movie is playing we can raise the volume, and we generally think both tasks happen in parallel, but on a single CPU they are not parallel; they are concurrent.
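Concurrency without parallelism can be simulated in a few lines. In this sketch (an illustration only; `task` and `run_single_cpu` are hypothetical names, and Python generators stand in for processes), one simulated CPU runs a single step of one task and then "context-switches" to the next. Only one task executes at any instant, yet both make progress, which is exactly the interleaving described above.

```python
def task(name, steps):
    """A task that does 'steps' units of work, giving up the CPU after each one."""
    for i in range(1, steps + 1):
        yield f"{name}{i}"

def run_single_cpu(tasks):
    """Interleave tasks on ONE simulated CPU by switching after every step."""
    trace = []
    ready = list(tasks)
    while ready:
        current = ready.pop(0)
        try:
            trace.append(next(current))   # run one step of this task
            ready.append(current)         # context switch: back of the line
        except StopIteration:
            pass                          # task finished; drop it
    return trace

print(run_single_cpu([task("A", 3), task("B", 2)]))
# ['A1', 'B1', 'A2', 'B2', 'A3']
```

Parallelism would require a second CPU executing B's steps at the same moment as A's, which this single-CPU sketch deliberately does not model.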

(4) Multicore systems :-- Multicore systems place multiple computing cores on a single chip, where each core appears as a separate processor to the operating system. By using multiple cores we can achieve true parallelism, not just concurrency.

In general, five areas present challenges in programming for multicore systems:

Dividing activities. This involves examining applications to find areas that can be divided into separate, concurrent tasks and thus can run in parallel on individual cores.

Balance. While identifying tasks that can run in parallel, programmers must also ensure that the tasks perform equal work of equal value. In some instances, a certain task may not contribute as much value to the overall process as other tasks; using a separate execution core to run that task may not be worth the cost.

Data splitting. Just as applications are divided into separate tasks, the data accessed and manipulated by the tasks must be divided to run on separate cores.

Data dependency. The data accessed by the tasks must be examined for dependencies between two or more tasks. In instances where one task depends on data from another, programmers must ensure that the execution of the tasks is synchronized to accommodate the data dependency.

Testing and debugging. When a program is running in parallel on multiple cores, there are many different execution paths. Testing and debugging such concurrent programs is inherently more difficult than testing and debugging single-threaded applications.
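The data-dependency challenge can be sketched with a `threading.Event`. In this hypothetical example, task B needs a value that task A produces; B is deliberately started first, and the event forces it to wait until A has published the data. The names `task_a`, `task_b`, and the `config` value are illustrative assumptions.

```python
import threading

shared = {}
ready = threading.Event()   # signals that task A's output is available
log = []

def task_a():
    shared["config"] = {"chunks": 4}   # produce the data task B depends on
    log.append("A produced config")
    ready.set()                        # publish: B may now proceed

def task_b():
    ready.wait()                       # block until A has produced the data
    log.append(f"B read chunks={shared['config']['chunks']}")

b = threading.Thread(target=task_b)
a = threading.Thread(target=task_a)
b.start()   # start B first on purpose: without the Event it could read too early
a.start()
a.join()
b.join()
print(log)   # ['A produced config', 'B read chunks=4']
```

Without the synchronization, B could run before A and fail with a `KeyError`; bugs of that kind depend on timing, which is one reason testing and debugging concurrent programs is harder.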

(5) Data parallelism is a way of performing parallel execution of an application on multiple processors. It focuses on distributing data across different nodes in the parallel execution environment and enabling simultaneous sub-computations on these distributed data across the different compute nodes. This is typically achieved in SIMD mode (Single Instruction, Multiple Data mode) and can either have a single controller controlling the parallel data operations or multiple threads working in the same way on the individual compute nodes (SPMD).
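A small data-parallel sketch: the data are split into chunks, every worker applies the same operation (`sum_of_squares`, a hypothetical example operation) to its own chunk, and the partial results are combined. In standard CPython, threads do not execute Python bytecode in parallel because of the global interpreter lock, so for CPU-heavy work you would swap in `ProcessPoolExecutor`, which has the same `map` interface; the data-splitting structure stays the same.

```python
from concurrent.futures import ThreadPoolExecutor

def sum_of_squares(chunk):
    """The single operation every worker applies to its own slice of data."""
    return sum(x * x for x in chunk)

def split(data, parts):
    """Divide data into 'parts' contiguous chunks of near-equal size."""
    size, extra = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks

data = list(range(1, 11))     # 1..10
chunks = split(data, 4)       # one chunk per (simulated) core
with ThreadPoolExecutor(max_workers=4) as pool:
    partials = list(pool.map(sum_of_squares, chunks))   # same op, different data
total = sum(partials)         # combine the partial results
print(chunks, partials, total)
```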

Task parallelism focuses on distributing threads of execution across parallel computing nodes. These threads may execute the same code or different code, and they exchange data either through shared memory or through explicit messages, as the parallel algorithm requires. In the most general case, each thread of a task-parallel system can be doing a completely different task while coordinating with the others to solve a specific problem. In the simplest case, all threads execute the same program and use their node IDs to decide which part of the work each is responsible for. Most common task-parallel algorithms follow the master-worker model, with a single master and multiple workers: the master distributes the computation among the workers according to scheduling rules and other task-allocation strategies.
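For contrast with data parallelism, here is a minimal master-worker sketch of task parallelism: the master (`master`, a hypothetical name) hands a different task to each worker over the same data, then gathers the results. The choice of statistics as the "tasks" is an illustrative assumption.

```python
from concurrent.futures import ThreadPoolExecutor
import statistics

def master(data):
    """Master: give a *different* task to each worker, then gather results."""
    tasks = {
        "min": min,
        "max": max,
        "mean": statistics.mean,
        "median": statistics.median,
    }
    with ThreadPoolExecutor(max_workers=len(tasks)) as workers:
        futures = {name: workers.submit(fn, data) for name, fn in tasks.items()}
        return {name: f.result() for name, f in futures.items()}

print(master([7, 3, 9, 1, 5]))
```

Each worker runs different code on the same data, whereas in the data-parallel case every worker runs the same code on different data.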

(6)

Thread libraries may be implemented either in user space or in kernel space. The former involves API functions implemented solely within user space, with no kernel support. The latter involves system calls, and requires a kernel with thread library support.

There are three main thread libraries in use today :--

- POSIX Pthreads - the threads extension of the POSIX standard; may be provided as either a user-level or a kernel-level library.

- Win32 threads - a kernel-level library available on Windows systems.

- Java threads - Because Java programs generally run on a Java Virtual Machine, the thread implementation depends on the OS and hardware the JVM runs on; typically the JVM uses Pthreads on UNIX-like systems and the Win32 (Windows) API on Windows.
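The create/run/join pattern shared by these libraries can be sketched with Python's `threading` module, which CPython builds on the platform's native threads (Pthreads on POSIX systems, Windows threads on Windows). This mirrors the classic "sum the integers 1..N in a worker thread" demo; `SummationThread` is a hypothetical name, and the comparison to `pthread_create`/`pthread_join` is an analogy, not a one-to-one mapping.

```python
import threading

class SummationThread(threading.Thread):
    """Worker thread that sums the integers 1..upper."""

    def __init__(self, upper):
        super().__init__()
        self.upper = upper
        self.total = 0   # written by the worker, read by the parent after join()

    def run(self):
        # Body executed in the new thread (cf. the start routine given to pthread_create).
        self.total = sum(range(1, self.upper + 1))

worker = SummationThread(10)
worker.start()        # roughly analogous to pthread_create
worker.join()         # roughly analogous to pthread_join: wait for the worker to exit
print(worker.total)   # 55
```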

(7)

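Process execution consists of a cycle of CPU execution and I/O wait, and a process alternates between the two. A CPU burst is a period during which the process executes instructions on the CPU; an I/O burst is a period during which it waits for an I/O request (for example, reading data from a disk) to be serviced.

A CPU burst ends when the process issues an I/O request that it must wait for. The I/O burst then lasts until the requested data has been read or written, after which the process becomes ready again and its next CPU burst begins. Execution starts with a CPU burst, and the final CPU burst ends with a system request to terminate execution.

CPU bursts and I/O bursts therefore alternate. An I/O-bound process typically has many short CPU bursts, while a CPU-bound process has a few long ones; the system works best with a good mix of both kinds of process.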
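A burst trace can be modelled as an alternating list of CPU and I/O entries. This sketch checks that a trace starts with a CPU burst and alternates, then totals each kind. The two traces (`editor`, `encoder`) and their numbers are made up for illustration only.

```python
def summarize(bursts):
    """Return (total CPU ms, total I/O ms) for one process's burst trace.

    bursts: list of ("CPU", ms) / ("IO", ms) entries in execution order.
    """
    kinds = [kind for kind, _ in bursts]
    # Execution begins with a CPU burst, and the two kinds must alternate.
    if not kinds or kinds[0] != "CPU" or any(a == b for a, b in zip(kinds, kinds[1:])):
        raise ValueError("trace must start with CPU and alternate CPU/IO")
    cpu = sum(ms for kind, ms in bursts if kind == "CPU")
    io = sum(ms for kind, ms in bursts if kind == "IO")
    return cpu, io

# Hypothetical traces: an I/O-bound editor and a CPU-bound video encoder.
editor = [("CPU", 2), ("IO", 40), ("CPU", 1), ("IO", 35), ("CPU", 2)]
encoder = [("CPU", 120), ("IO", 5), ("CPU", 150)]
print(summarize(editor), summarize(encoder))   # (5, 75) (270, 5)
```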

| Process | Burst Time (ms) | Priority |
|---|---|---|
| P1 | 8 | 3 |
| P2 | 4 | 1 |
| P3 | 2 | 5 |
| P4 | 3 | 4 |
| P5 | 5 | 2 |

- Consider the set of processes shown in the table above, with the length of the CPU burst time given in milliseconds. The processes are assumed to have arrived in the order P1, P2, P3, P4, and P5, all at approximately time 0. Draw three Gantt charts illustrating the execution of these processes using FCFS, round-robin (quantum size = 5), and non-preemptive priority scheduling (a smaller priority number implies a higher priority). Calculate the average waiting time and the average turnaround time for each scheduling algorithm.

- What is the convoy effect?

- Explain the difference between multilevel queue scheduling and multilevel feedback queue scheduling.


a. Gantt chart for FCFS: P1 (0-8) → P2 (8-12) → P3 (12-14) → P4 (14-17) → P5 (17-22)

| Process | Burst time | Completion time | Turnaround time | Waiting time |
|---|---|---|---|---|
| P1 | 8 | 8 | 8 | 0 |
| P2 | 4 | 12 | 12 | 8 |
| P3 | 2 | 14 | 14 | 12 |
| P4 | 3 | 17 | 17 | 14 |
| P5 | 5 | 22 | 22 | 17 |

Average waiting time = (0 + 8 + 12 + 14 + 17) / 5 = 51 / 5 = 10.2 ms

Average turnaround time = (8 + 12 + 14 + 17 + 22) / 5 = 73 / 5 = 14.6 ms
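The FCFS figures can be checked with a short sketch. The function name `fcfs` is hypothetical; it assumes, as the question does, that all processes arrive at t = 0 in the listed order, so each process waits exactly as long as the total burst time of the processes ahead of it.

```python
def fcfs(processes):
    """processes: (name, burst) pairs in arrival order, all arriving at t=0."""
    time, gantt, waiting, turnaround = 0, [], {}, {}
    for name, burst in processes:
        gantt.append((name, time, time + burst))
        waiting[name] = time          # waited for every process ahead of it
        time += burst
        turnaround[name] = time       # completion time, since arrival was t=0
    return gantt, waiting, turnaround

procs = [("P1", 8), ("P2", 4), ("P3", 2), ("P4", 3), ("P5", 5)]
gantt, waiting, turnaround = fcfs(procs)
avg_wait = sum(waiting.values()) / len(waiting)
avg_tat = sum(turnaround.values()) / len(turnaround)
print(gantt)
print(avg_wait, avg_tat)   # 10.2 14.6
```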

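Gantt chart for round robin (quantum size = 5): P1 (0-5) → P2 (5-9) → P3 (9-11) → P4 (11-14) → P5 (14-19) → P1 (19-22)

| Process | Burst time | Completion time | Turnaround time | Waiting time |
|---|---|---|---|---|
| P1 | 8 | 22 | 22 | 14 |
| P2 | 4 | 9 | 9 | 5 |
| P3 | 2 | 11 | 11 | 9 |
| P4 | 3 | 14 | 14 | 11 |
| P5 | 5 | 19 | 19 | 14 |

Average waiting time = (14 + 5 + 9 + 11 + 14) / 5 = 53 / 5 = 10.6 ms

Average turnaround time = (22 + 9 + 11 + 14 + 19) / 5 = 75 / 5 = 15 ms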
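A round-robin simulation confirms the corrected sums (53 and 75). This is a minimal sketch assuming all processes arrive at t = 0 in the listed order; `round_robin` is a hypothetical name. A process that still has work left after its quantum goes to the back of the ready queue.

```python
from collections import deque

def round_robin(processes, quantum):
    """processes: (name, burst) pairs, all arriving at t=0 in the given order."""
    burst = dict(processes)
    remaining = dict(processes)
    ready = deque(name for name, _ in processes)
    time, gantt, finish = 0, [], {}
    while ready:
        name = ready.popleft()
        run = min(quantum, remaining[name])    # run for at most one quantum
        gantt.append((name, time, time + run))
        time += run
        remaining[name] -= run
        if remaining[name] > 0:
            ready.append(name)                 # quantum expired: back of the queue
        else:
            finish[name] = time
    # Everything arrives at t=0, so turnaround = completion time
    # and waiting = turnaround - burst.
    turnaround = dict(finish)
    waiting = {n: finish[n] - burst[n] for n in finish}
    return gantt, waiting, turnaround

procs = [("P1", 8), ("P2", 4), ("P3", 2), ("P4", 3), ("P5", 5)]
gantt, waiting, turnaround = round_robin(procs, quantum=5)
avg_wait = sum(waiting.values()) / len(waiting)
avg_tat = sum(turnaround.values()) / len(turnaround)
print(gantt)
print(avg_wait, avg_tat)   # 10.6 15.0
```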

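Gantt chart for non-preemptive priority: P2 (0-4) → P5 (4-9) → P1 (9-17) → P4 (17-20) → P3 (20-22)

| Process | Burst time | Completion time | Turnaround time | Waiting time |
|---|---|---|---|---|
| P1 | 8 | 17 | 17 | 9 |
| P2 | 4 | 4 | 4 | 0 |
| P3 | 2 | 22 | 22 | 20 |
| P4 | 3 | 20 | 20 | 17 |
| P5 | 5 | 9 | 9 | 4 |

Average waiting time = (9 + 0 + 20 + 17 + 4) / 5 = 50 / 5 = 10 ms

Average turnaround time = (17 + 4 + 22 + 20 + 9) / 5 = 72 / 5 = 14.4 ms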
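Because every process arrives at t = 0, non-preemptive priority scheduling reduces to sorting by priority number and running each process to completion. A minimal sketch (`priority_nonpreemptive` is a hypothetical name):

```python
def priority_nonpreemptive(processes):
    """processes: (name, burst, priority) tuples; smaller number = higher priority.

    All processes arrive at t=0, so the schedule is simply the processes
    sorted by priority, each running to completion (no preemption).
    """
    order = sorted(processes, key=lambda p: p[2])   # stable sort: ties keep arrival order
    time, gantt, waiting, turnaround = 0, [], {}, {}
    for name, burst, _ in order:
        gantt.append((name, time, time + burst))
        waiting[name] = time        # time spent in the ready queue before starting
        time += burst
        turnaround[name] = time     # completion time, since arrival was t=0
    return gantt, waiting, turnaround

procs = [("P1", 8, 3), ("P2", 4, 1), ("P3", 2, 5), ("P4", 3, 4), ("P5", 5, 2)]
gantt, waiting, turnaround = priority_nonpreemptive(procs)
avg_wait = sum(waiting.values()) / len(waiting)
avg_tat = sum(turnaround.values()) / len(turnaround)
print([name for name, _, _ in gantt])   # ['P2', 'P5', 'P1', 'P4', 'P3']
print(avg_wait, avg_tat)                # 10.0 14.4
```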

b. The convoy effect is a phenomenon in which the system slows down because of one long process (a process with a long burst time). Under FCFS scheduling, when a process with a long burst time gets the CPU, all the processes queued behind it must wait for it to finish, so they accumulate long waiting times and CPU and device utilization is lower than it would be if the shorter processes went first.
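The convoy effect can be quantified with the burst times from the table above. This sketch (`avg_fcfs_wait` is a hypothetical helper) compares the FCFS average waiting time for the arrival order, where the long P1 goes first, against the same jobs run shortest-first.

```python
def avg_fcfs_wait(bursts):
    """Average waiting time when bursts run back to back in the given order."""
    time, total_wait = 0, 0
    for b in bursts:
        total_wait += time   # this process waited for everything ahead of it
        time += b
    return total_wait / len(bursts)

arrival_order = [8, 4, 2, 3, 5]       # P1..P5 from the table: long P1 is first
short_first = sorted(arrival_order)   # same jobs, short ones ahead of P1
print(avg_fcfs_wait(arrival_order), avg_fcfs_wait(short_first))   # 10.2 6.0
```

The total work is identical in both orders; only the position of the long job changes, and that alone raises the average wait from 6.0 ms to 10.2 ms.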

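c. The main differences between multilevel queue scheduling and multilevel feedback queue scheduling are:

In multilevel queue scheduling, each process is permanently assigned to one queue based on some property of the process, such as its memory size, priority, or process type. In multilevel feedback queue scheduling, processes can move between queues: a process that uses too much CPU time is moved to a lower-priority queue, and a process that waits too long in a lower-priority queue may be moved to a higher-priority one (aging).

Because its queue assignment is static, multilevel queue scheduling has low scheduling overhead but is inflexible; multilevel feedback queue scheduling adapts to how each process actually behaves, which makes it more flexible but also more complex to configure.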
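A simplified multilevel feedback queue can be sketched as below, using the processes from the table. Assumptions, all hypothetical: two round-robin levels with quanta 2 and 4 ms above one FCFS level, every process arriving at t = 0, and no aging. A process that uses its whole quantum without finishing is demoted one level; under a plain multilevel queue, by contrast, each process would stay in its original queue.

```python
from collections import deque

def mlfq(processes, quanta):
    """Simplified multilevel feedback queue (no aging, all arrivals at t=0).

    processes: (name, burst) pairs. quanta: time quantum of each round-robin
    level; one extra FCFS level sits below them.
    """
    levels = [deque() for _ in range(len(quanta) + 1)]
    remaining = dict(processes)
    for name, _ in processes:
        levels[0].append(name)       # every process starts at the top level
    time, finish, final_level = 0, {}, {}
    while any(levels):
        lvl = next(i for i, q in enumerate(levels) if q)   # highest non-empty level
        name = levels[lvl].popleft()
        if lvl == len(quanta):
            run = remaining[name]                       # bottom level: FCFS, run to completion
        else:
            run = min(quanta[lvl], remaining[name])     # at most one quantum
        time += run
        remaining[name] -= run
        if remaining[name] == 0:
            finish[name], final_level[name] = time, lvl
        else:
            levels[lvl + 1].append(name)   # used the full quantum: demote
    return finish, final_level

procs = [("P1", 8), ("P2", 4), ("P3", 2), ("P4", 3), ("P5", 5)]
finish, final_level = mlfq(procs, quanta=[2, 4])   # hypothetical quanta
print(finish)
print(final_level)
```

The short P3 finishes in the top queue, P2, P4, and P5 finish after one demotion, and the long P1 sinks to the FCFS level, which is the behaviour-based sorting a static multilevel queue cannot do.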
